Automated Visual Evaluation (AVE) for cervical cancer screening

Your research has focused on HPV natural history and cervical cancer prevention, especially in lower-resource settings. What is new to report to HPW readers?

While eagerly awaiting the results of one-dose HPV vaccine trials, we are particularly interested in validating complementary screening approaches in two areas: 1) self-sampled, rapid HPV testing that distinguishes clinically distinct type groups useful for management decisions (HPV16; HPV18/45; HPV31/33/35/52/58; and the lower-risk HPV39/51/56/59/68); and 2) the use of "artificial intelligence" (more accurately, machine learning) to interpret smartphone images as a decision aid for health workers performing Visual Inspection with Acetic Acid (VIA).
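The type grouping above can be pictured as a small lookup table. The sketch below is illustrative only: it assumes the groups are ordered from highest to lowest risk, as the listing suggests, and the names `HPV_TYPE_GROUPS` and `highest_risk_group` are hypothetical, not part of any actual assay or software.

```python
from typing import Optional

# HPV type groups as listed in the interview. ASSUMPTION: insertion order
# reflects management priority (HPV16 first, lower-risk group last).
HPV_TYPE_GROUPS: dict[str, frozenset[int]] = {
    "HPV16": frozenset({16}),
    "HPV18/45": frozenset({18, 45}),
    "HPV31/33/35/52/58": frozenset({31, 33, 35, 52, 58}),
    "HPV39/51/56/59/68 (lower risk)": frozenset({39, 51, 56, 59, 68}),
}


def highest_risk_group(detected_types: set[int]) -> Optional[str]:
    """Return the first (highest-priority) group containing any detected type,
    or None if none of the listed carcinogenic types was detected."""
    for group, types in HPV_TYPE_GROUPS.items():
        if detected_types & types:  # set intersection: any overlap counts
            return group
    return None
```

For example, a sample positive for HPV52 and HPV59 would be assigned to "HPV31/33/35/52/58", the higher-priority of the two groups it touches.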

What is the role of machine learning as an adjunct to VIA?

Given the COVID-19 pandemic, whenever and wherever screening is judged sufficiently safe, any screening encounter we recommend must be both very economical and accurate, so that clinical management is limited to a small group of women with a high probability of precancer.

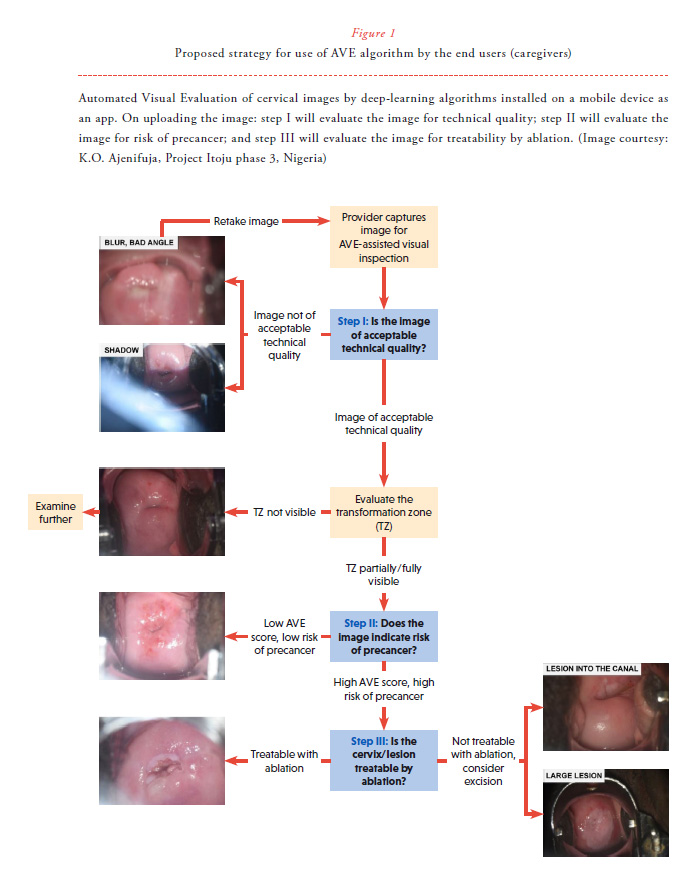

In collaboration with Global Health Labs, our NIH-based team and collaborators are developing and validating machine-learning algorithms that identify precancer in cervical images taken with a smartphone (Figure 1). The algorithm will run as an app on a dedicated smartphone: the health worker will take a digital image and, within 30 seconds and without internet access, get a "second opinion" on what the cervical appearance indicates.

The health worker will remain the ultimate judge of clinical management. The output is a continuous score from 0 to 1, with higher values indicating a greater chance that the image represents precancer. We call this method "Automated Visual Evaluation (AVE)". AVE has shown very promising initial results in identifying underlying histologic precancer in old-style film images (from the 60,000-image "cervigram" archive of the Guanacaste Project).1 Whether for primary screening or for triage of HPV-positive women, the accuracy we observed was better than human interpretation of the cervical images and better than three kinds of cytology. With the National Library of Medicine and our collaborators at Rutgers, we have shown that similar machine learning is possible with images from a modified smartphone.2
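Because the health worker keeps the final say, one way to present the continuous score as a "second opinion" is to dichotomize it and report whether it agrees with the worker's VIA call. This is a minimal sketch under stated assumptions: the 0.5 cutoff is purely illustrative (the interview gives no threshold), and `second_opinion` and `SecondOpinion` are hypothetical names, not part of any AVE software.

```python
from dataclasses import dataclass

# HYPOTHETICAL cutoff for illustration only; the interview states no
# threshold, and a real one would have to be chosen during validation.
ILLUSTRATIVE_CUTOFF = 0.5


@dataclass
class SecondOpinion:
    score: float           # AVE output in [0, 1]; higher = more likely precancer
    ave_positive: bool     # True if score is at or above the cutoff
    agrees_with_via: bool  # True if the dichotomized score matches the VIA call


def second_opinion(score: float, via_positive: bool,
                   cutoff: float = ILLUSTRATIVE_CUTOFF) -> SecondOpinion:
    """Compare a dichotomized AVE score with the health worker's VIA result.
    The function only reports agreement; it makes no management decision."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"AVE score must lie in [0, 1], got {score}")
    ave_positive = score >= cutoff
    return SecondOpinion(score, ave_positive, ave_positive == via_positive)
```

For example, a score of 0.8 alongside a VIA-negative call comes back with `agrees_with_via=False`, flagging a case the health worker might want to look at again; the decision itself stays with the worker.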

What are the main challenges in scaling up AVE as a screening tool?

To be scaled up, AVE needs to be transferred to widely available and relatively inexpensive devices. One convenient approach would be to use dedicated smartphones, repurposed to act as medical devices, running AVE software applications. The central challenge is that a robust smartphone algorithm must be trained to recognize precancer in the kind of processed images that smartphones produce. Each smartphone model differs in the color and focus of its images, and in other, more subtle ways (Figure 2). Deep learning needs "big data": in this case, training requires a very large collection of cervical images, each labeled with the underlying truth of whether precancer is present. As we all know, the appearance of precancer can be subtle and easily confused with signs of HPV infection and inflammation. When training the algorithm, we define precancer as restrictively as possible; preferably, we restrict it to CIN3 or AIS histology with HPV typing showing one of the 13 best-established carcinogenic types. We are also designing an ancillary pre-application that checks whether the cervical image is adequate for evaluation, and a third application, run afterwards, that assesses whether ablation is possible or excision is required. Finally, women living with HIV can have particular difficulty controlling HPV infection, resulting in complex cervicovaginal patterns that must be taken into account.
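The restrictive definition of precancer used for training labels can be written as a simple predicate. This sketch assumes the 13 carcinogenic types are exactly those listed in the first answer (16; 18/45; 31/33/35/52/58; 39/51/56/59/68), which total 13; the helper name `is_strict_precancer` is hypothetical. How images that fail the rule (for example, CIN2) are handled, whether as negatives or excluded from training, is not stated in the interview and is left open here.

```python
# ASSUMPTION: the 13 carcinogenic types are the union of the four groups
# listed in the interview (16; 18/45; 31/33/35/52/58; 39/51/56/59/68).
CARCINOGENIC_HPV_TYPES = frozenset(
    {16, 18, 31, 33, 35, 39, 45, 51, 52, 56, 58, 59, 68}
)

# Histologic diagnoses accepted as "precancer" under the restrictive rule.
PRECANCER_HISTOLOGY = frozenset({"CIN3", "AIS"})


def is_strict_precancer(histology: str, hpv_types: set[int]) -> bool:
    """True only if histology is CIN3 or AIS AND at least one detected HPV
    type is among the 13 carcinogenic types (hypothetical labeling helper)."""
    histology_ok = histology.strip().upper() in PRECANCER_HISTOLOGY
    carcinogenic_hpv = bool(hpv_types & CARCINOGENIC_HPV_TYPES)
    return histology_ok and carcinogenic_hpv
```

Requiring both conditions is what makes the label restrictive: a CIN3 diagnosis without a carcinogenic type, or a carcinogenic type with lesser histology, does not count as a positive.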

What are the main steps that you are taking to address some of these issues?

The US National Cancer Institute (NCI), in partnership with the US National Library of Medicine (NLM), Global Health Labs, Unitaid, the Clinton Health Access Initiative (CHAI), and many international collaborators, is coordinating a multi-site effort to collect, from around the world, thousands of digital images of the cervix linked to a histologic diagnosis.

Can you describe the AVE colposcopy network?

We are organizing an international collaborative effort to pool existing archives of digital cervical images. The largest source of such images is colposcopy images associated with a histologic diagnosis. Although they are not smartphone images, they are close enough to be very useful for understanding the general principles of AVE. Interested centers with a collection of at least 300 good-quality colposcopy images (still photographs or snapshots from video) are invited to join the colposcopy network. We want to stress that we have no intellectual property (IP) stake in this science: we are working strictly in the interest of public health and are developing no commercial product.

DISCLOSURE

The authors declare no conflicts of interest. NCI has received cervical screening assays and testing at reduced or no cost, for independent NCI evaluation, from Qiagen, Roche, BD, and MobileODT. Decisions to publish, and the content of publications, are free from commercial involvement, and there are no relevant financial interests, as monitored yearly by the NIH Ethics Office.

References

1. Hu L, Bell D, Antani S et al. An Observational Study of Deep Learning and Automated Evaluation of Cervical Images for Cancer Screening. J Natl Cancer Inst 2019; 111(9):923-932. Available from: http://www.ncbi.nlm.nih.gov/pubmed/30629194

2. Xue Z, Novetsky AP, Einstein MH et al. A demonstration of Automated Visual Evaluation (AVE) of cervical images taken with a smartphone camera. Int J Cancer 2020 (published online 2020 Apr 30). Available from: https://onlinelibrary.wiley.com/doi/abs/10.1002/ijc.33029